how to become a product manager in the age of ai

what it actually takes to break into product management in 2026 (and what no one tells you)

if you’d rather listen than read, check out the latest episode on “how to become a product manager in the age of ai”.

i have been watching people try to break into product all year. what used to be a predictable career path is now a moving target. the job market changed, ai arrived, and both together rewired the entry rules.

this article is for the person who wants to break in without wasting six months on empty checkboxes. i will be blunt about what hiring managers now care about, show the specific skills that matter, and give a reproducible playbook of projects and experiments that actually get attention in 2025 and into 2026.

what changed the market and why your old playbook broke

between 2020 and 2022 the pm pathway was straightforward. you learned a few frameworks, built some side projects or shipped a small feature at work, and you could get interviews. by 2024 the pipeline was complex; by 2025 supply and demand both shifted meaningfully.

hiring signals show that product job listings contracted from their 2022 peak. talent platforms and recruiters describe fewer open junior positions and more senior, outcome-oriented roles. that shift is visible in public hiring data and in what companies advertise.

salaries for product people remain strong at the top of the market, but the middle has thinned. aggregated compensation trackers show high medians for experienced pms, while openings for entry and mid-level positions are fewer and harder to find. that combination creates a tighter funnel for newcomers and a higher bar for those who do get interviews.

finally, ai changed what teams can do with a small headcount. repetitive research, rapid prototyping, and synthesis can be accelerated by off-the-shelf models and tools. that means companies can ask one senior pm, supported by ai tooling, to run the work of several people. the result is fewer entry roles and higher expectations for domain and technical fluency.

the old playbook still works in the abstract, but the conversion rate is lower. your path has to be higher signal, less noise.

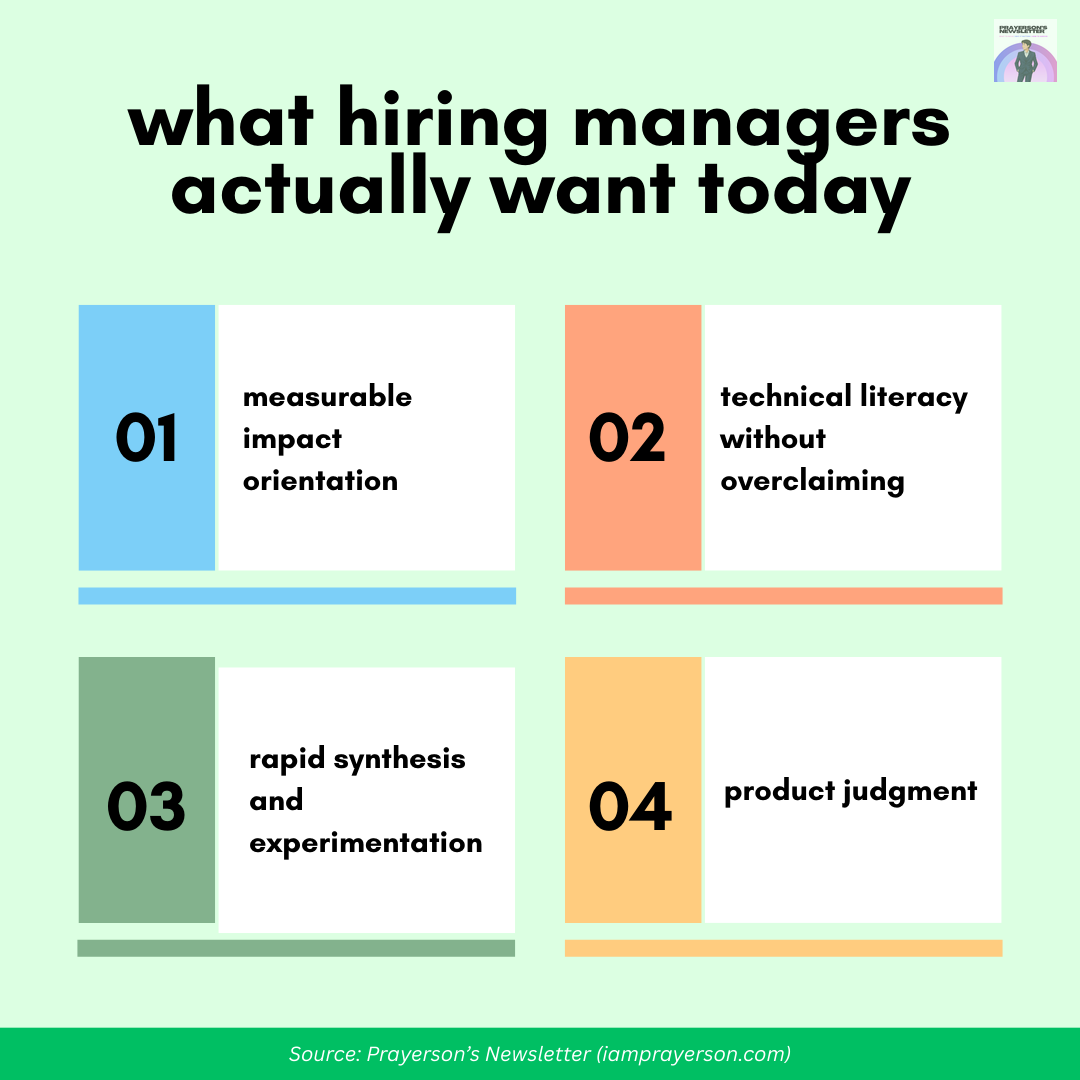

what hiring managers actually want today

a hiring manager i know at a mid-stage saas company summarized it bluntly: bring me a candidate who can do three things faster than anyone else and who thinks about outcomes first. those three things translate into the skills below.

measurable impact orientation

hiring teams want evidence you moved a metric. they are less interested in theoretical frameworks and more interested in what changed because of your work. the interview question now is typically framed as: what outcome did you own, how did you measure it, and what did you learn?

technical literacy without overclaiming

you do not need to be a software engineer, but you need to read apis, evaluate tradeoffs, and understand what model latency or token cost means for an ai feature. when product decisions require technical judgment, the pm should not be the blocker.

rapid synthesis and experimentation

teams expect faster loops. if you can prototype an idea, get real user feedback, and iterate in days or weeks instead of months, you are much more valuable.

product judgment

this is still a large part of the job. taste, intuition, and the ability to size problems matter more because teams will replicate whatever you decide. good judgment shortens feedback loops.

these are the truths recruiters and hiring managers will test for in interviews. you will be asked for examples that show measurable results, technical reasoning, and speed.

the skill stack that actually gets you hired in the age of ai

here are the concrete muscles to build. i put them in an order that mirrors how hiring teams evaluate candidates.

systems thinking and outcome mapping

learn to write an outcome map: persona, problem, metric, hypothesis, experiment. that document should fit on a single page and make it obvious what you will ship and how you will measure success. hiring managers expect to see this thinking in case studies.

basic data literacy

you must be able to run a cohort query, read a funnel chart, and interpret a simple a/b test. sql basics and using analytics tools are nonnegotiable. for interviews, have one concrete metric story you can explain end-to-end with numbers.

ai fluency for product use

this means three things:

you can design a workflow that uses a model (for research, summarization, personalization),

you understand model failure modes (hallucination, latency, bias), and

you can describe monitoring and rollback strategies. you do not have to train models, but you must understand how they change product tradeoffs.

prototype and ship mindset

build small, test fast. use no-code or low-code tools like lovable, replit, and cursor plus models to make a living prototype that demonstrates user value in minutes or hours. hiring teams prefer working prototypes over polished slide decks.

narrative clarity

you must write. long-form notes that explain decisions are still the single best predictor of thoughtful product work. invest in writing an annotated case study instead of another one-slide resume.

if you can demonstrate these five skills with concrete artifacts, you will stand out.
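as a small illustration of the data literacy skill above, here is a minimal sketch of reading a funnel in python. the step names and counts are made up for illustration; the function names are hypothetical helpers, not part of any analytics tool.

```python
# hypothetical funnel counts for an onboarding flow; replace with your own data
funnel = [
    ("visited signup page", 1000),
    ("created account", 400),
    ("completed onboarding", 200),
    ("reached first value", 120),
]


def step_conversions(steps):
    """return (from_step, to_step, rate) for each adjacent pair of funnel steps."""
    rates = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        rate = count / prev_count if prev_count else 0.0
        rates.append((prev_name, name, rate))
    return rates


def biggest_drop(steps):
    """return the transition with the lowest step-to-step conversion rate."""
    return min(step_conversions(steps), key=lambda r: r[2])


for prev_name, name, rate in step_conversions(funnel):
    print(f"{prev_name} -> {name}: {rate:.0%}")

worst = biggest_drop(funnel)
print(f"largest drop: {worst[0]} -> {worst[1]}")
```

the point of the exercise is the story you tell afterwards: which step loses the most users, what you changed, and how the rate moved.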

projects that hiring managers actually care about

there are three categories of projects that get attention. your portfolio should draw on these categories; aim for at least two strong examples in total that you can speak to with data.

a working prototype that solves a real problem

stop making mock screens and start with a prototype people can use. it can be simple: a figma prototype wired to a google sheet with a tiny backend, or a no-code app that uses a language model to summarize customer support tickets and triage them. the point is to show execution, not design polish.

an outcome-driven case study from real work or product practice

this is a story: the problem, why it mattered, the options considered, the experiment you ran, and the result. include numbers. hiring managers want to see your process against ambiguity, not just the final artifact. if you used ai in the project, be explicit about costs, monitoring, and steps you took to validate outputs.

a reproducible mini-biz or tool you built and shipped

this is the highest signal play. something people pay for, even a few customers, signals product sense and business judgment. it proves you can find users, ship value, and iterate.

examples i have seen work: a tiny plugin that saves time for a developer team and shows retention over 30 days; a research assistant that summarizes meeting notes and cut a team's time-to-decision by a measurable number of minutes; an automated onboarding flow that shortened time-to-first-value for new users.

how to present it: record a 3 minute demo video, include a short metric before/after, and write a one page note on tradeoffs. that is far more persuasive than a slide deck.

how to use ai as your accelerant, not your replacement

ai will do the grunt work in many of your early projects. use that to amplify signal, not to fake expertise.

practical playbook for using ai in projects:

scoping with ai

use a model to generate a 6 option map for solutions, then prune. you save time but you still decide.

rapid prototyping

wire a model into a prototype to demonstrate helpfulness. for example, use a summarization model to reduce a long onboarding flow into 3 action items and measure completion.

evidence generation

use ai to synthesize user feedback and surface themes, but always confirm with a small set of users. models reduce the time to hypothesis, not the need for validation.

cost and failure planning

include cost estimates for model inference and a simple rollback plan in your case study. hiring managers ask about these things now because product failures at scale are expensive.
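a cost estimate for a case study can be a few lines of arithmetic. the sketch below assumes a model billed per million input and output tokens; every number in it (traffic, token counts, prices) is a hypothetical placeholder, so substitute your provider's current pricing and your own usage.

```python
def monthly_inference_cost(requests_per_day, input_tokens, output_tokens,
                           price_in_per_million, price_out_per_million,
                           days=30):
    """estimate monthly spend in dollars for one model-backed feature."""
    cost_per_request = (
        input_tokens * price_in_per_million
        + output_tokens * price_out_per_million
    ) / 1_000_000
    return requests_per_day * days * cost_per_request


# hypothetical inputs: 2,000 requests a day, 1,500 tokens in, 300 tokens out,
# at an assumed $1.00 per million input tokens and $4.00 per million output tokens
estimate = monthly_inference_cost(
    requests_per_day=2000,
    input_tokens=1500,
    output_tokens=300,
    price_in_per_million=1.00,
    price_out_per_million=4.00,
)
print(f"estimated monthly cost: ${estimate:.2f}")  # prints $162.00 for these inputs
```

in the case study, pair the number with what you would do if usage grew tenfold: cache, shorten prompts, switch to a smaller model, or turn the feature off.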

ai favors candidates who understand model tradeoffs. it reveals the difference between someone who knows how to use tools and someone who understands product design.

the practical entry paths that actually work in 2025–2026

there are no shortcuts, but there are efficient routes. choose one and follow it to completion.

internal transition (the highest success rate)

move laterally inside a company by solving a clear problem for a team that needs product help. the path is easier if you already work in a role with customer context: support, success, sales, engineering, analytics, or design. create an internal case study and pitch for a rotational product role.

builder-first route (highest signal)

ship something real on your own. a side project that gains real users, even a few dozen, is a multiplier for interviews. you will learn product judgment rapidly and have measurable outcomes to show.

specialist to pm (practical)

engineers, designers, and data folks can shift to product by leading a project end-to-end and documenting impact. pair a built prototype with a clear story about how you prioritized and what you learned.

ai-product apprenticeships and residencies (emerging)

some orgs now hire junior folks into ai-specific residency programs where they learn model ops, product design, and safety. these are rare but high-signal if available.

avoid “bootcamp only” strategies. many bootcamps give you templates; they do not give you the signal of independent product work. companies now look for real outcomes.

how to prepare for interviews and what to show in the first screen

the first screening will be harsh. to survive it, your public signals must be aligned.

linkedin profile and readme

write a concise one-paragraph readme under your pinned project that explains the user problem, your role, and the metric you moved. recruiters give your page about 30 seconds, so put the evidence at the top.

the short narrative deck

prepare a single pdf of one or two projects, each with: problem, hypothesis, experiment, result, and 3 minute video demo. make it scannable. interviewers will use this to decide if they bring you to the next round.

the technical discussion

be ready to explain api decisions, data sources, and a simple monitoring plan for any ai features you propose. you will be asked how your feature could fail in production and what you would do to detect and fix it.

the metrics story

have a 3 slide narrative about the metric you moved, the experiment design, and the statistical confidence. recruiters do not expect full RCT expertise, but they expect you to be precise about how success was measured.
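being precise about statistical confidence can be as simple as a two-proportion z-test on a conversion metric. the sketch below is one common approach, not the only valid one; it assumes independent users, reasonably large samples, and at least some conversions in the pooled data. the counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """return (lift, z, two-sided p-value) for variant b versus control a."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # pooled rate under the null hypothesis that both variants convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


# hypothetical experiment: 100 of 1,000 control users converted, 130 of 1,000 in the variant
lift, z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
print(f"lift: {lift:.1%}, z: {z:.2f}, p-value: {p_value:.3f}")
```

in an interview, say what the p-value does and does not tell you, and mention the sample size you would have wanted before calling the result.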

practice the conversation that connects metrics to product tradeoffs. that is what separates successful candidates.

how to stay relevant after you land the role

the first job is not the finish line. it is the acceleration phase.

own a metric and defend it

move from feature ownership to metric ownership. pick one or two key metrics and be obsessive about improving them.

instrument decisions for automation

use ai to automate descriptive work so you can spend time on causal thinking. if you still spend days doing the same slides, you are not scaling.

build a small library of reproducible experiments

document experiments as templates other pms can reuse in minutes. that makes your team faster and raises your signal inside the company.

learn to write for influence

as your career grows, your leverage will be how clearly you can make complex tradeoffs visible to stakeholders. memos win more often than presentations.

mentor upward and outward

helping junior engineers and designers learn to use the tools you created increases your reach more than owning more projects.

safety nets and ethics you must think about as a new pm

ai projects can scale mistakes as quickly as they scale useful behavior. hiring managers now care about whether you think about safety.

data privacy and consent

if your prototype touches user data, have a plan for consent and deletion. document it.

monitoring and rollback

show how you will detect drift, monitor outputs, and roll back a model that produces unsafe results.

evaluation and bias testing

include a clear evaluation plan for model quality across user cohorts. early-stage issues compound if you ignore them.
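one way to make cohort evaluation and a rollback trigger concrete is a pass-rate check per user cohort. this is a minimal sketch: the cohort names, the labeled results, the 0.75 quality floor, and the "roll back if any cohort is under the floor" rule are all hypothetical choices you would set with your team.

```python
from collections import defaultdict


def pass_rate_by_cohort(results):
    """results: iterable of (cohort, passed) pairs from a labeled evaluation set."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for cohort, passed in results:
        totals[cohort] += 1
        if passed:
            passes[cohort] += 1
    return {cohort: passes[cohort] / totals[cohort] for cohort in totals}


def cohorts_below_floor(rates, floor):
    """return the cohorts whose pass rate falls below the agreed quality floor."""
    return sorted(cohort for cohort, rate in rates.items() if rate < floor)


def should_roll_back(rates, floor):
    """hypothetical rule: roll back if any cohort is under the floor."""
    return bool(cohorts_below_floor(rates, floor))


# hypothetical evaluation results: (cohort, did the model output pass review?)
results = (
    [("new_users", True)] * 8 + [("new_users", False)] * 2
    + [("power_users", True)] * 19 + [("power_users", False)] * 1
    + [("non_english", True)] * 6 + [("non_english", False)] * 4
)

rates = pass_rate_by_cohort(results)
print(rates)
print("below floor:", cohorts_below_floor(rates, floor=0.75))
print("roll back:", should_roll_back(rates, floor=0.75))
```

an overall pass rate would hide the weakest cohort here, which is the reason to break the evaluation out by cohort in the first place.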

showing you have thought through these issues in interviews moves you from an executor to a responsible operator.

a realistic 6 month plan to break in

this is the exact plan i give candidates who ask me to coach them. follow it without skipping steps.

month 0

choose a single, concrete problem and one user persona. commit to solving it.

month 1

design and build a prototype using no-code or simple stacks. include an ai workflow that reduces time to value by a measurable amount.

month 2

validate with 10 to 30 users and collect quantitative and qualitative feedback. iterate twice.

month 3

add instrumentation and a simple metric dashboard. run a small a/b or time series test.

month 4

polish the case study: 3 minute demo video, one page metric summary, and a 500 word decision memo.

month 5

start applying to internal roles, small startups, or product residencies. use your case study to get meetings.

month 6

convert interviews into offers by explaining tradeoffs, showing monitoring plans, and being precise about impact.

this plan is efficient because it forces decisions under constraints, which is how product work actually happens.

closing note: what changes and what does not

ai and market compression make the path harder, but they also make the signal clearer. the best way in is no longer generic polishing. it is doing one thing well, proving it, and telling the story in numbers.

big claims i used above are grounded in hiring and industry reports: public hiring signals show fewer junior openings and higher demand for senior and ai-skilled product roles; compensation data shows high medians for strong pms.

this is not a comfort piece. it is a practical one. if you commit to building signal, mastering the five core muscles, and shipping one project that proves impact, you will materially increase your odds. hiring managers are tired of templates. they hire people who ship value.

if you want, i will convert the 6 month plan into a notion template, and i will draft a sample case study you can adapt to your own project. which would you prefer first, the template or the sample case study? tell me in the comments :)